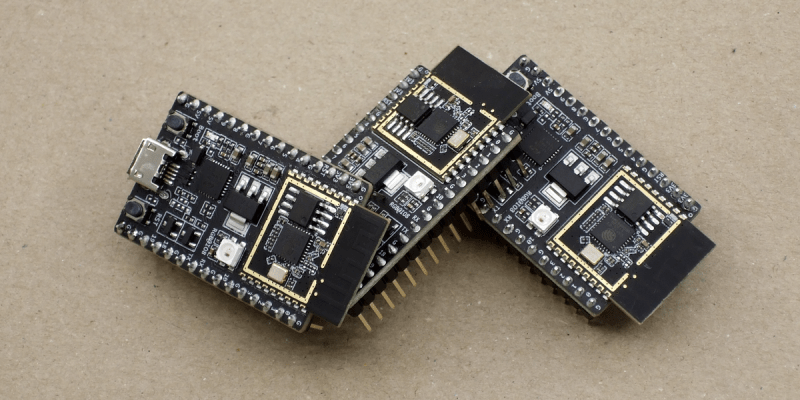

We just got our hands on some engineering pre-samples of the ESP32-C3 chip and modules, and there’s a lot to like about this chip. The question is what should you compare this to; is it more an ESP32 or an ESP8266? The new “C3” variant has a single 160 MHz RISC-V core that out-performs the ESP8266, and at the same time includes most of the peripheral set of an ESP32. While RAM often ends up scarce on an ESP8266 with around 40 kB or so, the ESP32-C3 sports 400 kB of RAM, and manages to keep it all running while burning less power. Like the ESP32, it has Bluetooth LE 5.0 in addition to WiFi.

Espressif’s website says multiple times that it’s going to be “cost-effective”, which is secret code for cheap. Rumors are that there will be eight-pin ESP-01 modules hitting the streets priced as low as $1. We usually require more pins, but if medium-sized ESP32-C3 modules are priced near the ESP8266-12-style modules, we can’t see any reason to buy the latter; for us it will literally be an ESP8266 killer.

On the other hand, it lacks the dual cores of the ESP32, and simply doesn’t have as many GPIO pins. If you’re a die-hard ESP32 abuser, you’ll doubtless find some features missing, like the ultra-low-power coprocessor or the DACs. But it does share a lot of the ESP32 standouts: the LEDC (PWM) peripheral and the unique parallel I2S come to mind. Moreover, it shares the ESP-IDF framework with the ESP32, so despite running on an entirely different CPU architecture, a lot of code will run without change on both chips just by tweaking the build environment with a one-liner.

If you were confused by the chip’s name, like we were, a week or so playing with the new chip will make it all clear. The ESP32-C3 is a lot more like a reduced version of the ESP32 than it is like an improvement over the ESP8266, even though it’s probably destined to play the latter role in our projects. If you count in the new ESP32-S3 that brings in USB, the ESP32 family is bigger than just one chip. Although it does seem odd to lump the RISC-V and Tensilica CPUs together, at the end of the day it’s the peripherals more than the CPUs that differentiate microcontrollers, and on that front the C3 is firmly in the ESP32 family.

Our takeaway: the ESP32-C3 is going to replace the ESP8266 in our projects, but it won’t replace the ESP32, which simply has more of everything when we need it. The shared codebase and peripheral architecture make it easier to switch between the two when we don’t need the full-blown ESP32. In that spirit, we welcome the newcomer to the family.

But naturally, we’ve got a lot more to say about it. Specifically, we were interested in exactly what the RISC-V core brought to the table, and ran the module through power and speed comparisons with the ESP32 and ESP8266 — and it beats them both by a small margin in our benchmarks. We’ve also become a lot closer friends with the ESP-IDF SDK that all of the ESP32 family chips use, and love how far it has come in the last year or so. It’s not as newbie-friendly as ESP-Arduino, for sure, but it’s a ton more powerful, and we’re totally happy to leave the ESP8266 SDK behind us.

RISC-V: Power and Speed

The ESP32-C3 shares the coding framework with the ESP32, some of the peripherals, and has about the same amount of memory. What’s different? The RISC-V CPU of the C3 vs. the Tensilica cores in the ESP32 and the ESP8266. So we thought we’d put them through their paces and see how they stack up in terms of processing speed and overall power use.

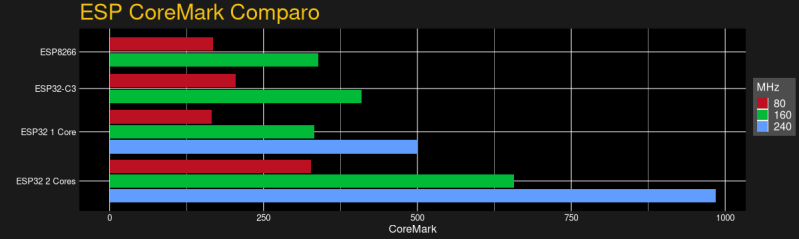

In terms of standard benchmarks for microcontrollers and other embedded devices, CoreMark is probably the go-to. And we found that it had already been ported to the ESP8266 and ESP32 by [Ochrin]. (Thanks!) CoreMark includes three tests: finding and sorting with linked lists to tax the memory units, running a state machine to test switch/case branching speed, and a matrix multiplication task to tax the CPU and compiler.

In practice, and mimicking our general experience with the ESP8266 and ESP32 frameworks, the code compiled without any hassle for the ESP32 and ESP32-C3. In contrast, getting it running on the ESP8266 was a hair-pulling few hours spent downgrading versions of the RTOS framework, installing modules in Python 2 inside virtualenvs, and getting the set of PATHs and other environment variables just right. But we weren’t going to leave you without a proper comparo, so we burned the midnight oil.

The takeaway is that a single RISC-V core on the ESP32-C3 is marginally faster per MHz than a single core on either of the Tensilica-based devices. Of course, if you’re crunching numbers hard and using both cores of the ESP32, it’s in another league, and you know who you are. But if you’re running Arduino on the ESP32 and you’re not explicitly running the RTOS tasks yourself, or running MicroPython and not using threads, you’re probably running a single core on the ESP32 anyway. Modulo some small difference in having a free core to exclusively handle WiFi, you might not be much worse off with the C3.

While running this test, we also hooked up our super-sophisticated power measuring unit to the devices under test, a USB cable with three 3 Ω resistors and an oscilloscope. Of course, if you simply wanted the chip’s power specs, you could hit up the datasheet.

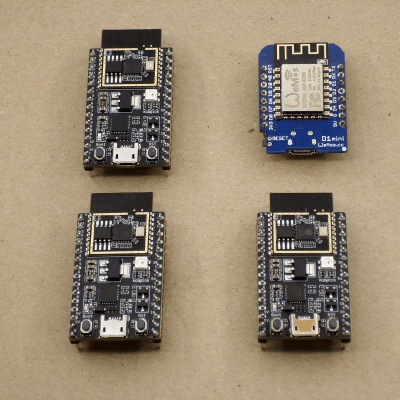

Instead, here we’re looking at the real-life performance of three different modules: the WeMos D1 mini for the ESP8266, the Lolin 32 for the ESP32, and our demo ESP32-C3-DevKitC-1, straight from Espressif. All were running with LEDs off, or clipped summarily with side-cutters in the case of the ESP32-C3 unit. (It didn’t make all that much difference, but you don’t know until you try.)

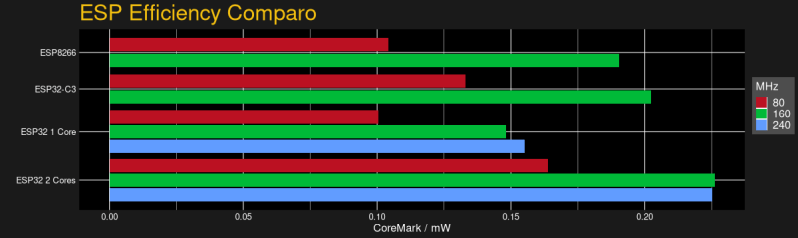

With power consumption data, we could also check out the modules’ power efficiency, measured in CoreMark score per milliwatt. Here, the ESP32-C3 does a bit better than the ESP8266, and lands somewhere between the ESP32 running one core and the ESP32 running two. This confirms what we’ve suspected for a while: if you want to save power, your best bet is to keep the chip sleeping as much as possible, and then run it full-out when it needs to run. If you’re doing that with an ESP32, use both cores.
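For anyone who wants to repeat the efficiency calculation at home, here is a minimal sketch of the arithmetic. It assumes the three 3 Ω resistors are wired in parallel to form a 1 Ω shunt in the USB supply line (the wiring isn’t spelled out above, so that is a guess), and every number in the example is a placeholder rather than one of our measurements. The function names are made up for illustration.

```python
def parallel_resistance(*resistors_ohm):
    """Equivalent resistance of resistors wired in parallel, in ohms."""
    return 1.0 / sum(1.0 / r for r in resistors_ohm)


def module_power_mw(v_shunt_mv, r_shunt_ohm, v_supply_v):
    """Power drawn by the board behind the shunt, in milliwatts.

    v_shunt_mv  -- voltage drop across the shunt, read off the scope (mV)
    r_shunt_ohm -- shunt resistance (ohms)
    v_supply_v  -- supply voltage ahead of the shunt (V)
    """
    current_ma = v_shunt_mv / r_shunt_ohm          # mV / ohm = mA
    v_board_v = v_supply_v - v_shunt_mv / 1000.0   # what is left after the shunt
    return current_ma * v_board_v                  # mA * V = mW


def coremark_per_mw(score, power_mw):
    """Efficiency figure: CoreMark score divided by power in milliwatts."""
    return score / power_mw


# Placeholder readings, NOT measurements from this article.
shunt_ohm = parallel_resistance(3.0, 3.0, 3.0)     # assumed parallel wiring
power_mw = module_power_mw(v_shunt_mv=50.0, r_shunt_ohm=shunt_ohm, v_supply_v=5.0)
print(f"{coremark_per_mw(score=400.0, power_mw=power_mw):.2f} CoreMark/mW")
```

Measured this way, the figure covers the whole board, voltage regulator included, which is why it won’t match the bare-chip numbers in a datasheet.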

And while our results are definitely significant and repeatable in terms of power and speed, they’re not game-changing. If we really needed to crush floats, we’d go for a chip that’s better suited for the task like an STM32F4xx or STM32F7xx, or those brutal NXP/Freescale 600 MHz i.MX ARM7 chips in the Teensy 4.0. If you’re buying an ESP-anything, it’s because you want the wireless connectivity, and it’s good to know that you’re not giving anything away with the ESP32-C3 on the CPU speed.

We hinted at it in the introduction, but the RISC-V nature of this chip, at least in terms of user experience, is no big deal. You code, compile, and flash just the same as you would with any other toolchain. The ESP-IDF makes using the new chip as easy as typing idf.py set-target esp32c3 and maybe idf.py fullclean for good measure. Then you go about your business. I must have swapped architectures 30 times in the course of this testing, and it’s literally that simple.

Of Peripherals and Pins

The first limitation you run into with an ESP8266 is that it doesn’t have enough GPIOs, or ADCs, for your particular project. While the ESP32 is a serious improvement in sheer GPIO quantity, once you’ve taken account of the pins with dedicated functions, or that are only input, you can end up pushing the limits of the chip easily. So you design in an external ADC chip and connect it via I2C, or you tack on a shift register and drive it with the blindingly fast I2S peripheral — something you can’t do with the ESP8266.

Sharing the peripheral set with the ESP32 will help alleviate some of these woes on the ESP32-C3, even though it has the same number of pins as the ESP8266. Heck, if you’re willing to allocate them, the C3 even has JTAG capabilities. And while the JTAG pins aren’t reassignable, a lot of the hardware peripherals are assignable to whichever pins you wish.

But we have to conclude that designing with the ESP32-C3 is still going to be a lot like designing for the ESP8266. I/O is limited. You’ll have to work with that.

Situating the ESP32-C3

The ESP8266 started life as a simple AT-command-set WiFi modem, and a bunch of hackers proved that it had a lot more to offer. It’s sometimes hard to remember how difficult and expensive WiFi connectivity was before the ESP8266, but at the time, WiFi for $5 was revolutionary compared to WiFi for $50 – $100. Flash forward a few years, and the ESP32 is a competent microcontroller in its own right, with some cool quirky features. Oh yeah, and WiFi and BLE. We’ve come a long way in a very short time.

The C3 is really a blend of the two: a limited number of GPIO pins like the ESP8266, but with nice peripherals like the ESP32. If it’s priced to compete with the ESP8266, it will push that chip into retirement. But maybe it’s time.

The ESP-IDF has grown on us, but it’s still nothing compared to the overabundance of examples for ESP-Arduino or the incredible ease of use of MicroPython. When the latter gets ported over to the ESP32-C3, with its significantly expanded memory over the ESP8266, that’ll be a tremendously inexpensive platform that will make many forsake ever compiling again. But when you need the speed of the native SDK, it’s nice to be able to lean on the extant ESP32 codebase, so an ESP8266 in ESP32’s clothing is a winner.

We’ve got pre-production samples, and Espressif is still working on supporting all the features of the ESP32-C3 in the IDF. Heck, you can’t buy an ESP32-C3 module yet anyway, so we’re stuck looking into our crystal ball a little bit. But the murmurs about pricing similarly to the ESP8266 make us take notice, and it’s certainly a worthy upgrade even at a small price premium, if that’s what the market will bear. At the same time, the ESP32-C3 is fundamentally less capable than the ESP32, so it’s got to come in cheaper than that. With ESP8266 dev boards selling for $2 and ESP32 dev boards selling for $4, that doesn’t leave much wiggle room, and we suspect some folks will just pony up for the ESP32s. So it’s hard to say how much the price really matters anyway.

But it’s nice to see RISC-V cores in more devices, not least because the standardized instruction set architecture — which essentially amounts to a standard set of machine-language commands — makes writing optimizing compilers easier and faster. For the end user, it doesn’t matter all that much, but if saving money on IP licensing fees is what allows Espressif to include a more modern peripheral set for the ESP8266 price, then we’re all for it.

Fwiw, we *might* have a solution in the final mass-production ESP32C3 when it comes down to not spending IO-pins on the JTAG port. Keep your fingers crossed that it makes it through verification *this* time….

*zombie voice*: ” Piiins, piiiiiiiiins.”

On that note: the C3 actually does have 4 more GPIO pins than the 8266 (but the 8266 has a separate ADC-only pin), so if you only need a few extra…

cJTAG? :P

Hope you’re not using Codasip IP, it’s riddled with small bugs and errors, not the best to work with…

No cJTAG. We looked at it and decided a solution you can immediately plug into your PC via USB is a better solution that doesn’t cost that much more chip space. Obviously, that is no guarantee it isn’t riddled with small bugs and errors all of its own :P

Fully confident in your capabilities ;-)

I find myself wondering why, in 2021, brand new microcontrollers are being fabbed with less RAM than my 1985 vintage IBM-XT had (256k, for those who are curious). It seems to me that 40k or even 400k is a rather tiny amount for such a capable little processor. I realize that MCUs can do a lot with a little, but only 40k?

RAM is pretty costly because it easily eats up lots of your silicon space. (From memory, I seem to remember something like half of the ESP32 die being occupied by RAM.) Plus, there are other things involved as well – with larger RAM, you can’t make it single-cycle access anymore and need to think about caches, which means more complexity elsewhere. Off-chip RAM is an option (e.g. using a stacked die), but especially DDR’ish solutions are pretty noisy and would wreak havoc with the WiFi subsystem. We do have something like PSRAM – you can add something like 8MB of pretty fast octal SPI PSRAM to an ESP32-S3, if you really need the memory.

Mostly: area cost and leakage. The latter is the killer for a BLE chip, although WiFi renders this point irrelevant.

for a simple microcontroller there is often not much need for a lot of ram. 256B might be plenty for a serial interface convertor, an environmental sensor with hysteresis, a programmable fan speed controller, etc.

some things require a more specialized microcontroller. maybe one with a lot of I/O pins because the microcontroller is gathering or translating a lot of external data. Even then it probably doesn’t need a lot of RAM, 4K might be more than enough depending on what you need to do with the data. Generally you don’t need lots of big buffers, but once in a while you do need that.

Once you start getting microcontrollers that have little webservers inside them, then that’s a rather specialized need and a lot of RAM makes it way easier to code for. Another important niche is image processing. But I’d want a microcontroller with a LOT of RAM and a camera interface (CSI), then things start to step into the territory of system-on-a-chip.

Comparing RAM of microcontroller with PC is not appropriate. PC uses a file system where by the program has to be loaded into RAM to execute. The PC OS is also loaded into RAM to be executed. In microcontroller, there’s no OS. Your program runs out directly from ROM or Flash memory and may not even require any RAM. RAM may be used for temporary storage.

256k of ram on an early pc was a board full of chips. Putting that megabytes of SRAM on an MCU die is still fairly expensive. Some of the Espressif modules have some PSRAM on a separate die inside the module. That is slower to access, but cheaper. The next thing after that would be something like a raspberry pi, with its larger footprint and higher cost and power consumption.

MCU’s run their programs directly from Flash: no need to first load all software into RAM. That’s why an MCU needs much less of it.

Because humans have evolved to write more efficient codes with better compilers with more efficient instruction sets.

They are not necessarily equivalent: one is a CPU and the other is an MCU. To make even an erroneous comparison, you might normalize the price, adjusted for inflation, based on differences in chip fab process capabilities, die real estate, and memory.

No word on which RISCV variant is implemented? RV32IMA?

Any hint if it is a in house development or outsourced IP like on GD32V?

RV32IMC. We have the sources to the IP, so we can do other interesting things with it in the future if we want to.

Would adding F (floating point) and even maybe D (double precision) have added that much more chip area? It does make programming easier a lot of the time.

Probably. But I wasn’t able to find numbers and I’m not setup with any RISC-V stuff at the moment to check. I’d love if someone could give you a real answer. My wild guess is 10% to 30% more area, based on intuition and experience and no real data.

I’m more of the type that feels that having even an integer hardware multiply and divide is a bit of a luxury. And we used to do floating point in Z80 or 6502 asm back in the day, it wasn’t too hard to write yourself once you have a test suite that exercises the corner cases of floating point arithmetic.

In my professional life I always had the luxury of kicking up the computationally difficult things to the system-on-chip and let a proper superscalar CPU (ideally with a vector unit or SIMD) take on the tough problems. Where things got dicey was a touch screen sensor that had to preprocess the data in order for it to fit in the narrow bandwidth of the pipe upstream. (the host driver still did a lot of the work, but some signal processing just had to be done in the uC)

> My wild guess is 10% to 30% more area

No it has to be way way less than that. Maybe 10-30% more area for the cpu itself, but most of the chip has to be used by stuff like the ram array, which is 400KB i.e. 3.2 million bits, dunno how many transistors per bit. The FPU is likely to be less than 1% of that.

> Maybe 10-30% more area for the cpu itself, but most of the chip has to be used by stuff like the ram array, which is 400KB i.e. 3.2 million bits, dunno how many transistors per bit. The FPU is likely to be less than 1% of that.

It’s a bit more than that. As a reference point, you can check this paper from the PULP team: https://pulp-platform.org/docs/publications/08715500.pdf. They discuss FPU sharing between cores particularly to reduce the area cost of the FPU. According to the table near the end of the paper, 8 cores occupy 400k um^2 (50k um^2 per core), while the shared FPU occupies 64 um^2 at 40LP. Zero-RI5CY is a rather small core, though. Comparing to RI5CY, which is about twice as big, the FPU would still add at least 50% to the CPU area.

What I mean is that the FPU is small compared to the memory array and other stuff on the chip. The CPU is also small in comparison to that stuff. Yes the desire for FPU is mostly for DSP functionality, which is useful in a device like this. It should have MAC instructions and the like, of course.

What’s your favorite though, coke float or rootbeer float? https://github.com/oprecomp/fp-soft

FP is a big burden on 8 bit processors, but it seems to me that on 32 and 64 bit architectures simple FP hardware is not that much of an advantage. FP routines are surprisingly small and fast on RISC machines. Where you really see a difference is with DSP hardware that can do sum-of-products on a pair of data vectors. If you don’t need fast DSP then integer is great, isn’t it?

The software situation is much better than I expected.

The real power of the RISC-V core for me is that it has a more mature LLVM backend than Xtensa, so it’s possible to code for these chips with more languages, eg. Rust.

There are already some projects to code Rust for the “older” ESP32 cores, but all examples I have seen end in the “Hello World”/Blinky example. I suspect there might not be tasking available. Could it be someone has ported RTFM? I could not find info.

I really would MASSIVELY LOVE being able to code in Rust for these WiFi MCUs, I hope it gets real soon.

The LLVM support for the tensilica CPU was always an issue, and Rust support is thin on the ground even now. For RISCV, the story should be much clearer.

This is a really important point which I was just amazed wasn’t mentioned in the article.

Not having even the possibility of Rust support on the older ESPs means stm32 wins out every time, but for some applications an ESP might suit better, which always seemed like a bit of a shame.

First the processor platform, then the software support…

Be patient, once the platform becomes popular, Rust (and any other worthwhile tool) will be ported to it.

No thanks, C and GCC are fine.

I suspect for the original ESP32 its replacement will be the ESP32-S3

I think it’s been announced but not released yet

I’m wondering if they’ll do a RISC-V version of that at some point

I’ve recently been experimenting with Rust / ESP32 which is pretty awesome.

Although ideally I’d like to be able to do SDMMC and Jtag at the same time (which currently isn’t possible since they share the same pins, although SPI SD access will work over VSPI I think)

Re. SDMMC and JTAG: ESP32-S3 should resolve this, as SDMMC peripheral signals can now be assigned to any I/O pins using the “GPIO Matrix”. Plus it might have a built-in “USB-JTAG” bridge that Sprite has hinted to above.

So long as the USB peripheral remains fully programmable, not limited to just a few specific use cases.

There are NO USB on the SoC nor the module itself. If it is not in the SoC datasheet, it is not there.

There might be the USB JTAG bridge on the break-out board for the module.

FYI: The module is the one with the gold rectangle + a black piece sticking out of the breakout board.

As for SDMMC, one of the SPI can be remapped. The JTAG debug interface is not remappable for obvious reasons – it has to stay put so that you can always reprogram the chip.

There’s no USB on the datasheet *yet*. It might never appear, but with a bit of luck, there will be some changes when the mass manufactured C3 comes out.

To be honest, when I see Sprite_tm is involved. I am not worried about what you are describing. I think he has proven himself often enough for the ones who know him to trust what he/his team designs :-).

🤣 that is a hilarious comment coming from someone named Bill Gates!!

chinese people are injecting their microchips in our devices to give us autism, beware the truth1!!11!!

This got reported for moderation, but I think it’s obviously enough of a joke, right? (I mean, the 1!1!!? Really?)

Bill Grates??? From the famous Michaelsoft??

Honor 2 be in ur prezence sir.

Have you audited the wireless connection on an ESP32?

I hate the binary blobs as well, but wouldn’t go so far as to claim espionage.

Open source wifi stack this time?

“my company creates value selling our experience with wifi systems, I think we should give away our designs for free, sounds like a reasonable business decision to me!”

Almost all of the value will be the IP core mapped in the chip. The software side of a WiFi system is not very complex (as an example, look at the ath9k softmac driver).

I wonder if they made that core themselves or bought one of the (not so many) options. If it is purchased they probably can’t give any info on it.

Does the ESP32-C3 allow flashing over USB?

No guarantees, but unless we find breaking bugs that keep it from working, the mass-manufactured model will.

Awesome, thanks!

I’m really interested to see how it does for power consumption, which always seems the Achilles heel of the ESP32. Whilst the ESP32 has a good deep sleep mode, wakeup takes so long that it eats lots of power and saps how long a battery-powered project can run. Maybe the RISC-V core will be better for this purpose?

Any additional information on the IP used for the RISC-V processor core?

Would be interesting to see how the core compares to other chips. For example, the GD32VF103 in the Longan Nano uses the Bumblebee core from Nuclei and Andes (apparently built around the AndesCore N22 and the Nuclei N200). Also, the Bouffalo BL602 in the PineCone seems to use SiFive’s E24 core.

one of the main things is that it has the RMT – as that is very very useful (and not for ir)..

American components, russian components all made in taiwan!

I think they have the RISC-V as the default option with the S2 ULP. The S3 has it as its main core. I expect that the next chip will be dual core assuming they can find the appropriate open IP.

Fun times ahead.

S3 will be Tensilica still, as we spent some time (as in, it happened in parallel with us bringing the RiscV stuff up) to add AI instructions and other fanciness to it. We’ll have more RiscV chips in the future, though, and multicore certainly is on the table.

Would be great if esp32c3 also had a ULP (risc-v of course, though something simpler seemingly would be fine).

why US people interested in a chip from China ?

.

NO MORE MADE IN CHINA !!

Because there are still few that react with their brains and not their fears.

My company makes all their chips in Taiwan. It’s tough to be competitive if you add politics to your decision making.

I am very excited.

Geesh, more chip combos to research & cost effective analyse, need a not so good looking secretary who’s IT literate ;-)

Thanks for post, cheers

I still only use $4 NodeMCU/ESP8266 for many home automation and robot control projects. They are mounted on two 17X10 protoboards for lots of jumper wire space. The I/O can be hugely increased with expander chips like MCP23017 if needed. The I/O can be used for I2C and OneWire buses to communicate with many, many devices on very few I/O. RTC and MCP23017 on same two I2C outputs. Multiple temperature sensors on a single OneWire. I use many, many libraries in the code that took awhile to find and get working. That’s why I avoided ESP32 at the time. Wireless uploads to remote devices and free web page HMIs. I don’t get the hype around the new Arduino Pico. It can’t do either one.

I don’t get the hype around the pico either, but it’s fine to want a board with no wireless.

Does the ESP32-C3 have a watchdog? The ESP8266 would hang regularly and needed a manual reset. A watchdog really helps if you want to do proper domotics or IoT.

I never had issues with ESP8266 freezing like this unless I have problems with my code.

But yes, ESP32 series has watchdog.

Small mistake: Teensy 4.0 doesn’t use “ARM7 i.MX” but Cortex-M7 i.MX.

I would gladly buy a similarly powerful & inexpensive chip from an American manufacturer. Can you please point me at one?

You would need to convince US manufacturers to embrace open architecture cores in their designs as a first step. Second, we all know that none of them actually manufactures a volume MCU in the US. Third, as a non-US person (most people in the world) I would rather appreciate completely open hardware from whatever country as we all know the US is the worst spy country in the whole world.

This sounds really exciting. I’m still pretty much sticking with 8266 d1 minis since my main use is for esphome, and they’re plenty for that. However, my esp32 count is increasing courtesy of Adafruit: first the coprocessor on the Matrix Portal, then the s2 on the MagTag which is really impressive.

Y’all need that Nordic Power Profiler Kit 2 (ppk2), beats your USB cable with resistors any day 😁 I’m definitely just waiting for a legit excuse to get one, I’ve stretched my excuses pretty thin at the moment with my Glasgow order but I was too excited not to order one. The ppk2 looks like a pretty sweet tool based on the Desk of Ladyada streams with it I’ve seen, one of those tools I’m glad to know exists for a pretty decent price.

Checkout X-NUCLEO-LPM01A 1na, can plug and play all/most ST Nucleo/Discovery and has external plug. STM32Cube Monitor-Power software, it has a display for stand alone use.

Does this still use the same BLE stack(Bluedroid) as the normal ESP32? it’s buggy and is HUGE!

On both ESP32 and ESP32-C3 you have a choice between bluedroid and NimBLE. The latter has noticeably smaller footprint.

Hi all, I just made a bitcoin ticker using an ESP32-C3 eng sample board and am trying to google whether this is the first RISC-V bitcoin ticker ever or not – so far I have not found any, so maybe it is :D

Here is the code: https://github.com/BugerDread/cryptoticker-esp32-c3

Yesterday I got a LILYGO® TTGO T-OI PLUS RISC-V ESP32-C3 V1.0. The LEDs are not helpful if I want to profit from the low current consumption in deep sleep. Can I switch off the LEDs during deep sleep by disabling a GPIO, or is there another possibility? I looked for schematics but did not find anything.

I wrote an integer Mandelbrot for all ESP32s and use this as benchmark. The ESP32 with one core needs 39 seconds and 21 seconds with two cores, the ESP32-S2 needs 122 seconds and the ESP32-C3 needs 193 seconds for 67 Mandelbrot images. See https://www.youtube.com/watch?v=ixST3fynV-g. The datasheet current consumption in RX 802.11b/g/n 20MHz are 63mA for -S2, 84mA for -C3, 97mA for ESP32 and 94mA for -S3. The available RAM in Arduino IDE is 208 KByte for ESP32-S2 WROOM, 235 K for ESP32 WROOM, 312 K for ESP32-C3, 2251 K for ESP32-S2 WROVER and 4328 K for ESP32 WROVER. See https://www.youtube.com/watch?v=Qs6JleTzCRA

I prefer the ESP32-S2 over the ESP32-C3. But I agree: every penny counts and maybe the -C3 is the new mass market star. But for me as a “maker” I want to have the ESP32-S3 and use SIMD (in assembler) for even faster Mandelbrot calculation.

Benchmark?

Please have a look to https://ckarduino.wordpress.com/benchmarks/

FWIW, Toit is being adapted to the C3 as we speak:

https://github.com/toitlang/toit/issues/89

Hi, I already know this article is a bit old, but I would like to ask something. Has anyone done any tests with a working and connected wifi? What I would like to say is that the ESP8266RTOS (and the Arduino framework) blocks ALL user activity and ALL interrupts for 1-2 seconds when negotiating a new connection (in the ESP32 the situation is mitigated due the dual core). Under these conditions, real-time applications are almost impossible. Does the ESP32-C3 have the same … “issue” ?