Venus hasn’t received nearly the same attention from space programs as Mars, largely because of its exceedingly hostile environment. Most electronics wouldn’t survive the 462 °C surface heat, never mind the intense atmospheric pressure and sulfuric acid clouds. With this in mind, NASA has been experimenting with the concept of a completely mechanical rover. [Beardy Penguin] and a team of fellow students from the University of Southampton decided to try their hand at the concept. Video after the break.

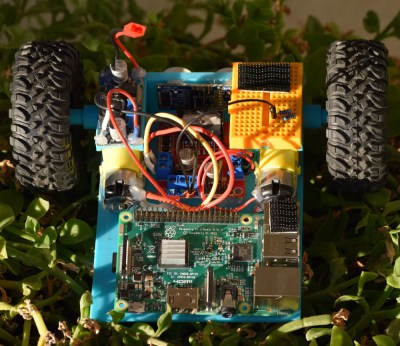

The project was divided into four subsystems: obstacle detection, the mechanical computer, locomotion (tracks), and the drivetrain. The obstacle detection system consists of three triple-rollers (left, center, and right) at the front of the rover, which trigger inputs on the mechanical computer when the rover encounters an obstacle above a certain size. Each input indicates the position of one roller (up or down), and the combination of inputs determines the appropriate maneuver to clear the obstacle. [Beardy Penguin] used Simulink to design the logic circuit, which consists of AND, OR, and NOT gates. The resulting 5-layer mechanical computer quickly ran into the limits of tolerances and friction, and the team eventually had trouble getting the design to work with the available input forces.
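The write-up doesn't publish the team's actual truth table, so the Python sketch below uses a hypothetical mapping purely to show how three up/down roller inputs can be combined with AND, OR, and NOT gates into mutually exclusive maneuver outputs. The function names and the choice of maneuvers are assumptions for illustration, not the Southampton design.

```python
"""Hypothetical three-roller obstacle logic built from AND/OR/NOT gates.

ASSUMPTION: the roller-to-maneuver mapping below is illustrative only;
it is not the truth table used by the Southampton team.
"""
from itertools import product


def AND(a: bool, b: bool) -> bool:
    return a and b


def OR(a: bool, b: bool) -> bool:
    return a or b


def NOT(a: bool) -> bool:
    return not a


def decide(left: bool, center: bool, right: bool) -> str:
    """Map roller states (True = roller pushed up) to a maneuver name."""
    # Back away if the center roller is up, or if both side rollers are up.
    reverse = OR(center, AND(left, right))
    # Only the left roller is up: steer away to the right.
    turn_right = AND(left, NOT(reverse))
    # Only the right roller is up: steer away to the left.
    turn_left = AND(right, NOT(reverse))
    # The three gate outputs are mutually exclusive; none set means clear path.
    if reverse:
        return "reverse"
    if turn_right:
        return "turn right"
    if turn_left:
        return "turn left"
    return "forward"


if __name__ == "__main__":
    # Print the full truth table for all eight roller combinations.
    for l, c, r in product([False, True], repeat=3):
        print(f"L={int(l)} C={int(c)} R={int(r)} -> {decide(l, c, r)}")
```

In a mechanical computer each of these gates becomes a linkage layer, which helps explain why stacking several layers compounds friction and tolerance losses.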

Because of the high-pressure atmosphere, an on-board wind turbine has long been proposed as a viable power source for a Venus rover. The turbine wasn’t part of this project, so it was replaced with a comparable 40 W electric motor. The output from the logic circuit passes through a timing mechanism and into a planetary gearbox, which reverses the output rotation by either driving the planet carrier together with the sun gear or locking the carrier in a stationary position.
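Why does switching the carrier reverse the output? A planetary set obeys the Willis equation, (ω_s − ω_c)/(ω_r − ω_c) = −Z_r/Z_s, where ω is angular speed and Z is tooth count for the sun (s), ring (r), and carrier (c). The sketch below assumes the sun is the input and the ring is the output, and uses hypothetical tooth counts (20-tooth sun, 60-tooth ring); the article doesn't specify either.

```python
"""Planetary gearbox direction switch via the Willis equation.

ASSUMPTIONS: sun gear is the input, ring gear is the output, and the
tooth counts below are hypothetical, not taken from the rover.
"""
from fractions import Fraction

SUN_TEETH = 20   # hypothetical
RING_TEETH = 60  # hypothetical (60 = 20 + 2 * 20-tooth planets)


def ring_speed(sun_rpm, carrier_rpm, sun_teeth=SUN_TEETH, ring_teeth=RING_TEETH):
    """Solve (w_s - w_c) / (w_r - w_c) = -Z_r / Z_s for the ring speed w_r."""
    ratio = Fraction(sun_teeth, ring_teeth)
    return carrier_rpm - ratio * (sun_rpm - carrier_rpm)


def output_speed(sun_rpm, mode):
    """Ring speed for the two carrier states described in the article."""
    if mode == "held":
        # Carrier locked stationary: ring turns opposite to the sun, geared down.
        return ring_speed(sun_rpm, 0)
    if mode == "locked":
        # Carrier driven with the sun: the whole set turns as one, same direction.
        return ring_speed(sun_rpm, sun_rpm)
    raise ValueError(f"unknown carrier mode: {mode!r}")


if __name__ == "__main__":
    print(output_speed(120, "held"))    # -40
    print(output_speed(120, "locked"))  # 120
```

With the carrier held, the output runs backwards at Z_s/Z_r of the input speed; with it locked to the sun, the gearset rotates as a solid unit, so toggling the carrier is enough to flip the direction of travel.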

As with many undergraduate engineering projects, the physical results were mixed, but the educational value was immense. The team got the individual subsystems working, but not the fully integrated prototype. Even so, they received several awards for the project and came third in an international Simulink challenge. The project also allowed another team to continue the work and refine the subsystems.