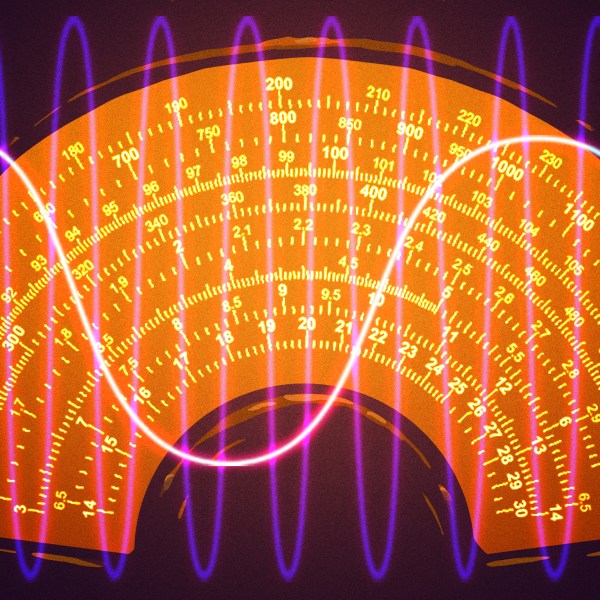

[Benn Jordan] had an idea. He’d heard of motion amplification technology, where cameras capture tiny vibrations in machinery and then visually amplify them for engineering analysis. This is typically the preserve of high-end industrial equipment, but [Benn] wondered if it really had to be this way. Armed with a modern 4K smartphone camera and the right analysis techniques, could he visually capture sound?
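The article doesn’t say how commercial motion-amplification systems work internally, but one well-known published approach is linear Eulerian video magnification (Wu et al., SIGGRAPH 2012): band-pass filter every pixel’s brightness over time, multiply that band by a gain, and add it back. Below is a minimal NumPy sketch of that idea, assuming grayscale frames stacked as a `(time, height, width)` array and an ideal FFT band-pass. The function names are hypothetical, and the spatial pyramid used in the paper is omitted.

```python
import numpy as np

def temporal_bandpass(frames, fps, f_lo, f_hi):
    """Ideal band-pass along the time axis (axis 0) of a (time, height, width) array."""
    n = frames.shape[0]
    spectrum = np.fft.rfft(frames, axis=0)
    freqs = np.fft.rfftfreq(n, d=1.0 / fps)
    keep = (freqs >= f_lo) & (freqs <= f_hi)
    spectrum[~keep] = 0  # zero every temporal frequency outside the band
    return np.fft.irfft(spectrum, n=n, axis=0)

def magnify(frames, fps, f_lo, f_hi, alpha):
    """Linear Eulerian magnification: frames + alpha * (band-passed frames)."""
    frames = np.asarray(frames, dtype=np.float64)
    return frames + alpha * temporal_bandpass(frames, fps, f_lo, f_hi)

# Usage: a 4x4-pixel "video" whose brightness wobbles by 1% at 5 Hz
fps = 30.0
t = np.arange(60) / fps
wobble = 0.5 + 0.01 * np.sin(2 * np.pi * 5.0 * t)
frames = np.tile(wobble[:, None, None], (1, 4, 4))
out = magnify(frames, fps, f_lo=4.0, f_hi=6.0, alpha=10.0)
# The 5 Hz wobble is now 11 times larger; the 0.5 mean brightness is unchanged
```

A narrow band around the vibration frequency of interest keeps the gain from also amplifying sensor noise at every other frequency.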

The video first explores commercially available “acoustic cameras,” which are primarily sold business-to-business at incredibly high prices. However, [Benn] suspected he could build something similar on the cheap. He started out with a 16-channel microphone array from MiniDSP that streams over USB and costs just $275, and paired it with a Raspberry Pi 5 running Acoular, a Python framework for acoustic beamforming. Acoular analyses multichannel audio and visualizes the results so you can locate sound sources. He added a 1080p camera, and soon enough he was able to overlay sound-location data on the video stream. Using this technique he located a hawk in a tree, which was pretty cool, and the total rig came in somewhere under $400.
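Acoular offers several beamforming algorithms, but the simplest idea behind an acoustic camera is delay-and-sum: for each candidate source point, work out how much later the sound reaches each microphone, shift the channels so the wavefronts line up, and sum. A real source adds coherently only at its own position, so the output power peaks there. This is a minimal NumPy sketch, not Acoular’s API. It assumes whole-sample delays (real implementations interpolate or work in the frequency domain), a speed of sound of 343 m/s, and, in the usage example, a hypothetical 4 × 4 grid with 50 mm spacing rather than the actual MiniDSP array geometry.

```python
import numpy as np

SPEED_OF_SOUND = 343.0  # m/s, air at about 20 °C

def relative_lags(mic_xyz, point, fs):
    """Arrival delay at each mic relative to the nearest one, rounded to whole samples."""
    dist = np.linalg.norm(mic_xyz - point, axis=1)
    return np.round((dist - dist.min()) / SPEED_OF_SOUND * fs).astype(int)

def delay_and_sum_map(signals, mic_xyz, grid_xyz, fs):
    """Steered output power for each candidate point.

    signals:  (n_mics, n_samples) recording
    mic_xyz:  (n_mics, 3) microphone positions in metres
    grid_xyz: (n_points, 3) candidate source positions in metres
    """
    n_mics, n_samples = signals.shape
    power = np.empty(len(grid_xyz))
    for i, point in enumerate(grid_xyz):
        lags = relative_lags(mic_xyz, point, fs)
        usable = n_samples - lags.max()
        # Later-arriving channels are advanced by their lag so the wavefronts line up
        aligned = np.stack([signals[m, lags[m]:lags[m] + usable] for m in range(n_mics)])
        beam = aligned.mean(axis=0)
        power[i] = np.mean(beam ** 2)
    return power

# Usage: simulate white noise from one grid point and see where the map peaks
fs = 48_000
xs = np.arange(4) * 0.05 - 0.075  # hypothetical 4x4 array, 50 mm pitch
mic_xyz = np.array([[x, y, 0.0] for x in xs for y in xs])
grid_xyz = np.array([[x, 0.0, 1.0] for x in np.linspace(-0.5, 0.5, 21)])  # a line 1 m away
source = grid_xyz[16]  # x = 0.3 m

lags = relative_lags(mic_xyz, source, fs)
rng = np.random.default_rng(0)
s = rng.standard_normal(4800 + lags.max())
signals = np.stack([s[lags.max() - lag:lags.max() - lag + 4800] for lag in lags])

power = delay_and_sum_map(signals, mic_xyz, grid_xyz, fs)
print(grid_xyz[np.argmax(power)])  # expected to land on the simulated source
```

Overlaying `power`, computed over a 2D grid instead of a line, on the camera image is what turns this into a “sound picture.”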

The rest of the video covers other sound-camera techniques—vibration detection, the aforementioned motion amplification, and some neat biometric techniques. It turns out your webcam can probably detect your heart rate, for example.
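The webcam heart-rate trick is usually called remote photoplethysmography: blood volume in the skin changes slightly with each heartbeat, which modulates how much light the skin reflects, an effect often reported to be strongest in the green channel. A minimal sketch, assuming you already have the mean green value of a face region for every frame (face tracking is omitted), picks the strongest frequency in a plausible pulse band. The 0.7 to 4 Hz band (42 to 240 bpm) and the function name are assumptions for illustration.

```python
import numpy as np

def estimate_heart_rate_bpm(green_means, fps, lo_hz=0.7, hi_hz=4.0):
    """Return the dominant frequency within [lo_hz, hi_hz], in beats per minute."""
    x = np.asarray(green_means, dtype=np.float64)
    x = x - x.mean()  # remove the constant skin brightness
    x = x * np.hanning(len(x))  # taper the ends to reduce spectral leakage
    spectrum = np.abs(np.fft.rfft(x))
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fps)
    band = (freqs >= lo_hz) & (freqs <= hi_hz)
    peak_hz = freqs[band][np.argmax(spectrum[band])]
    return peak_hz * 60.0

# Usage: 20 s of synthetic 30 fps data with a 1.2 Hz (72 bpm) pulse plus a little noise
fps = 30.0
t = np.arange(600) / fps
rng = np.random.default_rng(0)
green = 120.0 + 0.2 * np.sin(2 * np.pi * 1.2 * t) + rng.normal(0.0, 0.05, t.size)
bpm = estimate_heart_rate_bpm(green, fps)
```

The frequency resolution is 1/duration Hz, so a 20 s window resolves heart rate only to about 3 bpm; longer windows give finer estimates at the cost of responsiveness.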

It’s a great video that illuminates just what you can achieve with modern sound and video capture. Think SIGGRAPH-level stuff, but in a form you can digest over your lunch break. Video after the break.

[Thanks to ollie-p for the tip.]

I remember a project that used an XMOS controller to build a sound camera, or at least something that let you virtually move around the environment to locate sounds.

Shocking… (not really though)

“With its onboard XMOS interface, the UMA-16 is the perfect fit for the development of beam-forming algorithms or your DIY acoustic camera.”

https://www.minidsp.com/products/usb-audio-interface/uma-16-microphone-array

Acoustic processing using Python? This is one of those times where choosing the right programming language matters. There is no way you will get anything close to realtime processing using Python… which is why the project renders results from recordings.

If you try to solve all problems at once, you will end up solving none of them.

What programming language and hardware would you use to make it real time, out of curiosity? Would FPGAs or OpenCL on graphics cards be feasible, or overkill? I’ve never used either myself. Cheers, keith

On a given platform, I would expect any flavor of C, or any other decently compiled language, to process faster than Python, which is an interpreted language.

This is not as simple as you think.

Python really isn’t the issue here; getting a functioning framework is, and Python is well suited to that. It has a massive trove of optimized libraries written in C and assembly to do the heavy lifting when you actually get to that point.

Python with Numpy and Jupyter notebooks is the open source alternative to MATLAB. Add in SciPy, OpenCV, and PyTorch, and you’ve got a very powerful ecosystem for signal processing.

The things that need to be efficient are handled in the libraries, and are written in C or C++. Python is just the coordinator.
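The “coordinator” point is easy to see in a small example. Both functions below compute the mean power of each channel of a 16-channel, one-second recording; the first pushes every sample through the Python interpreter, while the second hands the whole loop to NumPy’s compiled code. The functions are illustrative, and timings vary by machine, so none are quoted here.

```python
import time

import numpy as np

def channel_power_loop(signals):
    """Pure-Python version: every sample passes through the interpreter."""
    out = []
    for channel in signals.tolist():
        total = 0.0
        for sample in channel:
            total += sample * sample
        out.append(total / len(channel))
    return out

def channel_power_numpy(signals):
    """Same arithmetic, but the per-sample loop runs inside NumPy's compiled code."""
    return np.mean(signals ** 2, axis=1)

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    signals = rng.standard_normal((16, 48_000))  # one second of 16-channel audio at 48 kHz
    for fn in (channel_power_loop, channel_power_numpy):
        t0 = time.perf_counter()
        fn(signals)
        print(f"{fn.__name__}: {time.perf_counter() - t0:.4f} s")
```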

I detest Python, but it seems to me there will be a kernel of hand-optimized loops, and then a lot of high-level code that you’ll want to futz with until you get it right, where flexibility is more valuable.

I downloaded the source to Acoular, and it really exemplifies why I don’t like Python. But chasing just one expensive operation to its base, the bulk of the work is done by SciPy’s FFT. I would guess (or hope) that SciPy has an efficient FFT implementation even though the interface is Python.
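For readers wondering where those FFT calls go: a common frequency-domain formulation of delay-and-sum splits each channel into blocks, FFTs them, averages the outer products into a cross-spectral matrix (CSM), and then scores each candidate point with steering vectors. Acoular’s frequency-domain beamformers also work from a CSM, but the sketch below is a hypothetical plain-NumPy version, not Acoular’s code; the 256-sample Hann-windowed blocks with no overlap, the 4 × 4 test array, and all names are assumptions.

```python
import numpy as np

SPEED_OF_SOUND = 343.0  # m/s

def cross_spectral_matrix(signals, block=256):
    """Welch-style CSM from Hann-windowed, non-overlapping blocks.

    Returns an array of shape (n_freqs, n_mics, n_mics).
    """
    n_mics, n_samples = signals.shape
    n_blocks = n_samples // block
    blocks = signals[:, : n_blocks * block].reshape(n_mics, n_blocks, block)
    spectra = np.fft.rfft(blocks * np.hanning(block), axis=2)  # (mics, blocks, freqs)
    # csm[f, i, j] = average over blocks of X_i(f) * conj(X_j(f))
    return np.einsum("ibf,jbf->fij", spectra, spectra.conj()) / n_blocks

def conventional_beamformer(csm_f, freq_hz, mic_xyz, grid_xyz):
    """Power w^H C w at one frequency for each grid point (delay-and-sum steering)."""
    dist = np.linalg.norm(grid_xyz[:, None, :] - mic_xyz[None, :, :], axis=2)  # (points, mics)
    w = np.exp(-2j * np.pi * freq_hz * dist / SPEED_OF_SOUND) / mic_xyz.shape[0]
    return np.real(np.einsum("pi,ij,pj->p", w.conj(), csm_f, w))

# Usage: a 3 kHz tone (exactly bin 16 for 256-sample blocks at 48 kHz) from x = 0.3 m
fs, f = 48_000, 3000.0
xs = np.arange(4) * 0.05 - 0.075
mic_xyz = np.array([[x, y, 0.0] for x in xs for y in xs])
grid_xyz = np.array([[x, 0.0, 1.0] for x in np.linspace(-0.5, 0.5, 21)])
dist = np.linalg.norm(mic_xyz - grid_xyz[16], axis=1)
t = np.arange(256 * 8) / fs
signals = np.cos(2 * np.pi * f * (t[None, :] - dist[:, None] / SPEED_OF_SOUND))
csm = cross_spectral_matrix(signals)
power = conventional_beamformer(csm[16], f, mic_xyz, grid_xyz)
```

The FFTs are computed once per block, and the CSM can then be reused for every grid point and frequency, which is why this formulation tends to be preferred over shifting raw samples.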

So I do think Python really handicaps you, even when used for these high-level tasks (every Python program is slow, no exceptions)… but I’m not convinced this operation could approach real time without a much more sophisticated effort than simply rewriting it in C. I suspect the bulk of the time is already spent in optimized C code.

In these cases, Python is used to configure the tools, and the tools are written in C/C++. It’s the same with PyTorch and AI: Python just configures the neural network, and the C AI tools do most of the heavy lifting.

You can import Numba and write C-like programs in Python syntax.

The Acoular library is using Numba (https://numba.pydata.org/) to compile speed-critical sections of Python.
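For a sense of what that looks like, here is a hypothetical inner loop of a time-domain delay-and-sum beamformer written as plain nested loops and decorated with Numba’s `@njit`, which compiles it to machine code on first call. It is not taken from Acoular. The `try`/`except` fallback exists only so the sketch still runs, much more slowly, where Numba isn’t installed.

```python
import numpy as np

try:
    from numba import njit  # compiles the decorated function to machine code on first call
except ImportError:  # fallback so the sketch still runs (slowly) without Numba
    def njit(func):
        return func

@njit
def delay_and_sum_power(signals, lags):
    """Steered output power for one set of integer per-mic lags, as explicit loops."""
    n_mics, n_samples = signals.shape
    usable = n_samples - lags.max()
    total = 0.0
    for t in range(usable):
        acc = 0.0
        for m in range(n_mics):
            acc += signals[m, t + lags[m]]
        acc /= n_mics
        total += acc * acc
    return total / usable

# Usage: four channels, each lagging the previous one by one sample
rng = np.random.default_rng(0)
src = rng.standard_normal(1003)
signals = np.stack([src[3 - k:3 - k + 1000] for k in range(4)])
lags = np.arange(4)
print(delay_and_sum_power(signals, lags))  # close to 1.0, the variance of src
```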

One of the reasons Python is popular for number crunching in data science and scientific computing is that many of the popular libraries are actually written in C/C++. In addition to that, projects like Cython and Numba allow you to selectively compile specific Python functions for speed reasons.

You write your program in Python, then you profile it, find the 2% of the code that takes 98% of the time, and optimize that by compiling it with Cython/Numba or rewriting it in C/C++.
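The profile-first step needs nothing beyond the standard library. Here is a minimal sketch with `cProfile` and `pstats`, where `smooth_python_loop` and `normalise` are hypothetical stand-ins for a slow pure-Python stage and a fast NumPy stage.

```python
import cProfile
import io
import pstats

import numpy as np

def smooth_python_loop(x, width=32):
    """Deliberately slow moving average written in pure Python."""
    values = list(x)
    return [sum(values[i:i + width]) / width for i in range(len(values) - width + 1)]

def normalise(x):
    """Fast stage: a single vectorised NumPy expression."""
    x = np.asarray(x)
    return (x - x.mean()) / x.std()

def pipeline(x):
    return normalise(smooth_python_loop(x))

def profile_report(func, *args, top=10):
    """Run func(*args) under cProfile and return the top entries by cumulative time."""
    profiler = cProfile.Profile()
    profiler.runcall(func, *args)
    stream = io.StringIO()
    pstats.Stats(profiler, stream=stream).sort_stats("cumulative").print_stats(top)
    return stream.getvalue()

if __name__ == "__main__":
    data = np.random.default_rng(0).standard_normal(50_000)
    print(profile_report(pipeline, data))
```

Once the report confirms where the time goes, that one function is the candidate for Numba, Cython, or a vectorised rewrite; the rest can stay as ordinary Python.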

Great video. I do wonder about the thermal imaging of the speaker, though. On its face, I agree that sound should generate heat, but the speaker will heat up much, much more from the electrical energy used to drive it. I think if you put two speakers in a “large enough” sealed box and only drove one of them, the second one would still move but be isolated from any heat radiating from the first one. That would be a closer model for measuring heat from sound. Maybe you did do that and just didn’t explain it?

Right! The experiment should be an object that absorbs sound, and gets warm from that. I would expect a loudspeaker to be mostly heat. Does anyone have numbers on that?

The efficiency of a driver is about 2%, which means 98% becomes heat. Even the best speakers are no better than 5% efficient.
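The arithmetic behind that split is simple, and a speaker’s published sensitivity offers a rough way to check an efficiency figure. Assuming the plane-wave approximation (so dB SPL roughly equals intensity level) and radiation into a half-space, 1 W of acoustic power spread over a hemisphere of radius 1 m (area 2π m²) gives an intensity level of about 112 dB, so a driver’s efficiency is roughly 10^((S − 112)/10), where S is its sensitivity in dB SPL at 1 W/1 m. That rule of thumb and the function names are assumptions of this sketch, not figures from the thread.

```python
import math

I_REF = 1e-12  # W/m^2, reference intensity for 0 dB

# Intensity level at 1 m when 1 W is radiated uniformly into a half-space (about 112 dB)
HALF_SPACE_1W_DB = 10 * math.log10((1.0 / (2 * math.pi)) / I_REF)

def efficiency_from_sensitivity(sensitivity_db):
    """Rough electro-acoustic efficiency from sensitivity in dB SPL at 1 W / 1 m."""
    return 10 ** ((sensitivity_db - HALF_SPACE_1W_DB) / 10)

def power_split(electrical_watts, efficiency):
    """Return (acoustic watts, watts turned into heat)."""
    acoustic = electrical_watts * efficiency
    return acoustic, electrical_watts - acoustic

print(round(HALF_SPACE_1W_DB, 1))  # 112.0
print(power_split(10.0, 0.02))  # 2% efficient: about 0.2 W of sound, 9.8 W of heat
print(round(100 * efficiency_from_sensitivity(90.0), 2))  # percent, for a 90 dB/1 W/1 m driver
```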

I’m 100% sure things heat up when hit by sound waves, but I think it’s gonna be so minute it would be impossible to measure with an off-the-shelf thermal camera. Heating from other sources like light, dissipation to the environment, and thermal inertia would also make it even harder to detect in any normal use case.

Did you know that even cheap thermal sensors are very sensitive? For example, the InfiRay P2 ($250) has an NETD below 40 mK.
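To put the last two comments side by side, here is a back-of-the-envelope estimate. For a plane wave, intensity is I = p²/(ρc), so even a loud 100 dB SPL tone carries only about 0.01 W/m². If a thin sheet absorbed all of it and lost heat by convection from both faces with a coefficient h of about 10 W/(m²·K) (a typical still-air figure, assumed here), its steady-state temperature rise would be I/(2h). The numbers below are estimates under those assumptions, not measurements.

```python
P_REF = 20e-6  # Pa, 0 dB SPL reference in air
RHO_AIR = 1.204  # kg/m^3, air at about 20 °C
C_AIR = 343.0  # m/s, air at about 20 °C

def intensity_from_spl(spl_db):
    """Plane-wave intensity in W/m^2 for a given sound pressure level."""
    p_rms = P_REF * 10 ** (spl_db / 20)
    return p_rms ** 2 / (RHO_AIR * C_AIR)

def steady_state_rise_k(intensity, absorbed_fraction=1.0, h=10.0):
    """Temperature rise of a thin sheet heated on one face, cooled by convection on both."""
    return absorbed_fraction * intensity / (2 * h)

for spl in (80, 100, 120):
    i = intensity_from_spl(spl)
    print(f"{spl} dB SPL: {i:.2e} W/m^2, rise about {1000 * steady_state_rise_k(i):.3f} mK")
```

Under these assumptions, everyday loudness produces a rise far below a 40 mK NETD; only around 120 dB does the estimate become comparable to it, and that is before the other heat sources mentioned above are taken into account.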

Interesting video, but please, where is the list of links and references to allow others to follow up?

Would it work with (very) low frequencies?

E.g., to locate the “hum”?
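A rough answer comes from comparing wavelength with array size. An array’s angular resolution is on the order of λ/D radians, where λ = c/f and D is the aperture, so once λ is much larger than D the array can no longer tell directions apart. The sketch below assumes c = 343 m/s and a hypothetical 0.15 m aperture; check the actual array’s dimensions before relying on the numbers.

```python
import math

C_AIR = 343.0  # m/s, air at about 20 °C

def wavelength_m(freq_hz):
    return C_AIR / freq_hz

def resolution_ratio(freq_hz, aperture_m):
    """lambda / D: values well above 1 mean the array cannot resolve direction."""
    return wavelength_m(freq_hz) / aperture_m

def aperture_needed_m(freq_hz, resolution_deg):
    """Aperture giving roughly the requested angular resolution (lambda / D rule of thumb)."""
    return wavelength_m(freq_hz) / math.radians(resolution_deg)

APERTURE = 0.15  # m, hypothetical small desktop array
for f in (3000, 500, 60):
    print(f"{f} Hz: wavelength {wavelength_m(f):.2f} m, lambda/D = {resolution_ratio(f, APERTURE):.1f}")
print(f"aperture for ~10 degrees at 60 Hz: {aperture_needed_m(60, 10):.0f} m")
```

By this rule of thumb, localizing a hum in the tens of hertz would need an array tens of metres across.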